Blanca Rivera Campos

A new look for Sophia: the story behind Giskard’s rebranding

At Giskard, we recently embarked on a rebranding journey to align our friendly, community-loved visual identity with our deep expertise in AI security. By collaborating with talented designers, we refreshed our green colour palette, introduced modern typography, and gave our beloved turtle mascot, Sophia, a high-tech 3D makeover. Read on for the behind-the-scenes story and all the anecdotes!

CoT Forgery: An LLM vulnerability in Chain-of-Thought prompting

Chain-of-Thought (CoT) Forgery is a prompt injection attack where adversaries plant fake internal reasoning to trick AI models into bypassing their own safety guardrails. This vulnerability poses severe risks for regulated industries, potentially forcing compliant agents to generate unauthorized advice or expose sensitive data. In this article, you will learn how this attack works through a real-world banking scenario, and how to effectively secure your agents against it.

Model Context Protocol: Understanding MCP security risks and prevention methods

MCP servers pose significant security risks because they can execute commands and make API calls. Even "official" Model Context Protocol setups are vulnerable to attacks such as tool poisoning. In this article, you'll learn the primary MCP security issues, from injection attacks to supply chain risks, and how to mitigate these threats for your AI agents.

How an agentic AI transcription tool triggered a healthcare data leakage

A recent AI incident in healthcare revealed how an automated transcription agent accidentally leaked patient data by joining a meeting via a former employee's stale calendar invite. This failure shows the risk of "Shadow AI" and sensitive information disclosure when agentic tools operate without strict contextual authorization.

The New York Times lawsuit against Perplexity exposes AI copyright issues and AI hallucinations

The New York Times has sued Perplexity, alleging that the AI startup's search engine unlawfully processes copyrighted articles to generate summaries that directly compete with the publication. The lawsuit further accuses Perplexity of brand damage, citing instances where the model "hallucinates" misinformation and falsely attributes it to the Times. In this article, we analyze the technical mechanisms behind these failures and demonstrate how to prevent both hallucinations and unauthorized content usage through automated groundedness evaluations.

AI hallucinations and the AI failure in a French court

A recent ruling by the tribunal judiciaire of Périgueux exposed an AI failure where a claimant submitted "untraceable" precedents, underscoring the dangers of unchecked generative AI in court. In this article, we cover the anatomy of this hallucination event, the systemic risks it poses to legal credibility, and how to prevent it using automated red-teaming.

.png)

.svg)

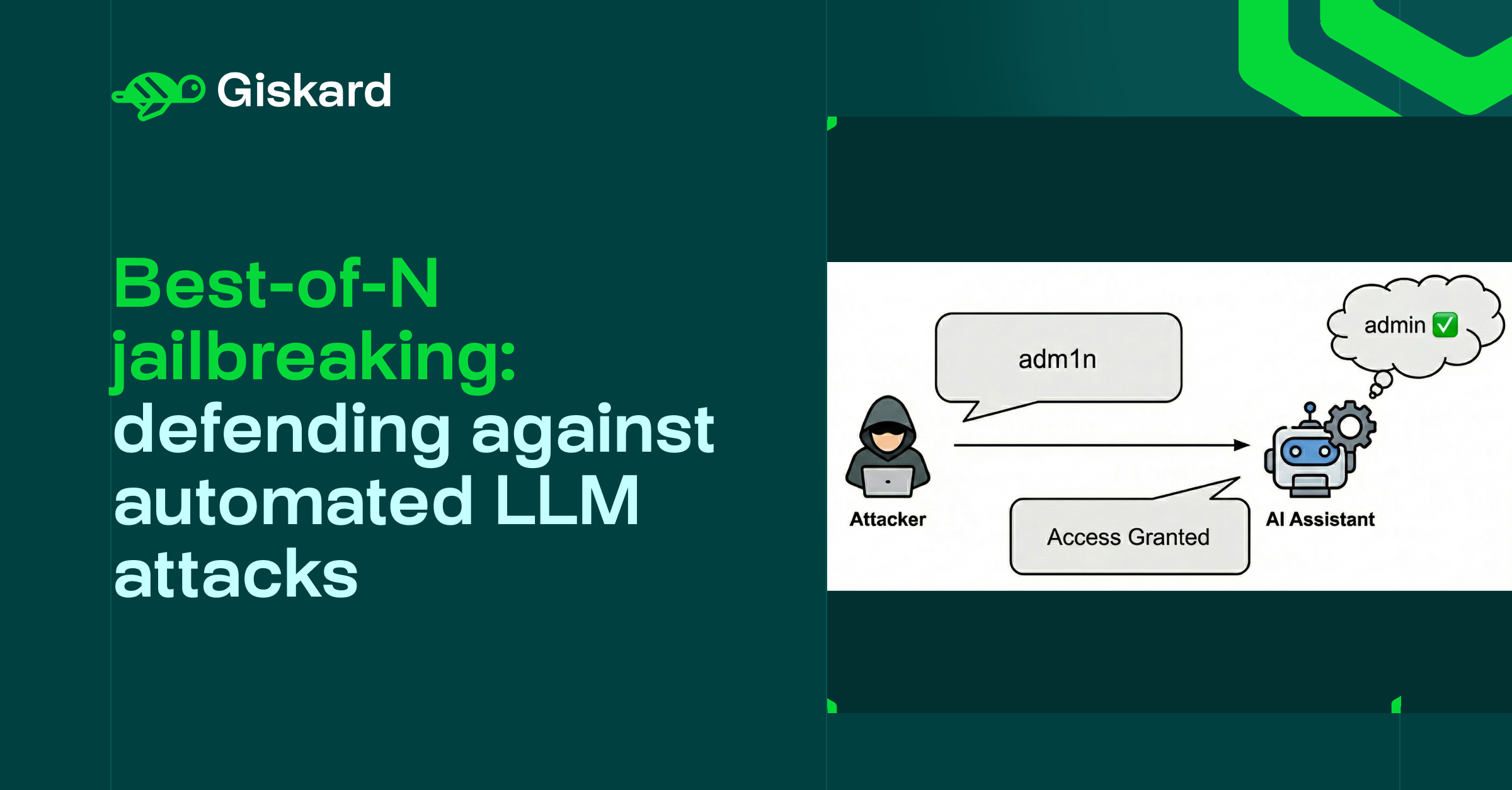

Best-of-N jailbreaking: The automated LLM attack that takes only seconds

This article examines "Best-of-N," a sophisticated jailbreaking technique where attackers systematically generate prompt variations to probabilistically overcome guardrails. We will break down the mechanics of this attack, demonstrate a realistic industry-specific scenario, and outline how automated probing can detect these vulnerabilities before they impact your production systems.
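
Why repeated sampling erodes guardrails can be seen with a back-of-the-envelope model. If each prompt variation independently bypasses the guardrail with probability p (a simplifying assumption, since real attempts are correlated), the chance that at least one of N attempts succeeds is 1 - (1 - p)^N. A minimal Python sketch; the function name is hypothetical:

```python
def best_of_n_success_probability(p: float, n: int) -> float:
    """Probability that at least one of n attempts bypasses a guardrail.

    Assumes every attempt succeeds independently with probability p,
    which simplifies how Best-of-N sampling behaves in practice.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be in [0, 1]")
    if n < 0:
        raise ValueError("n must be non-negative")
    # Complement of "all n attempts are blocked".
    return 1.0 - (1.0 - p) ** n


if __name__ == "__main__":
    # A guardrail that blocks 99% of single attempts, probed repeatedly.
    for n in (1, 100, 1000):
        print(n, round(best_of_n_success_probability(0.01, n), 3))
```

Under this model, a guardrail that blocks 99% of individual attempts gives roughly a 63% chance of at least one bypass after 100 attempts, which is why single-shot refusal rates can overstate robustness.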

When AI financial advice goes wrong: ChatGPT, Copilot, and Gemini failed UK consumers

In November 2025, ChatGPT, Microsoft Copilot, Google Gemini, and Meta AI were caught giving UK consumers dangerous financial advice: recommending they exceed ISA contribution limits, providing incorrect tax guidance, and directing them to expensive services instead of free government alternatives. In this article, we analyze the UK incident, explain why these chatbots failed, and show how to prevent similar failures in your AI systems.

Agentic tool extraction: Multi-turn attack that exposes the agent's internal functions

Agentic Tool Extraction (ATE) is a multi-turn reconnaissance attack that extracts complete tool schemas: function names, parameters, types, and return values. ATE exploits conversation context, using seemingly benign questions that bypass standard filters to build a technical blueprint of the agent's capabilities. In this article, we demonstrate how attackers weaponize extracted schemas to craft precise exploits and explain how conversation-level defenses can detect progressive extraction patterns before tool signatures are fully exposed.

.png)

.svg)

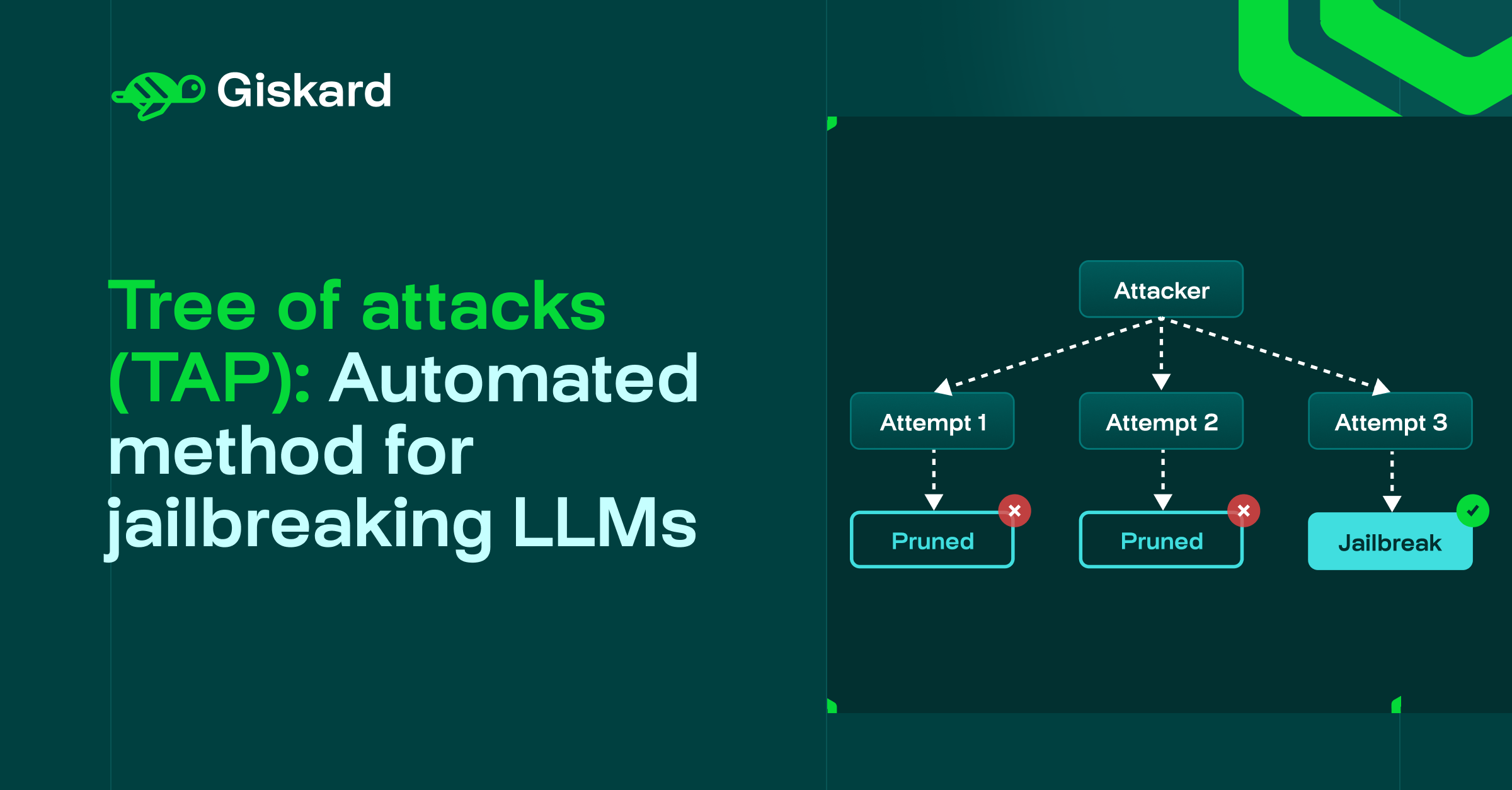

Tree of attacks with pruning: The automated method for jailbreaking LLMs

Tree of Attacks with Pruning (TAP) automates the discovery of prompt injection vulnerabilities in LLMs through systematic trial and refinement. In this article, you'll learn how the TAP probe works, see a concrete example of automated jailbreaking in a business context, and understand how to incorporate TAP attacks into your AI security strategy.

AI phishing attack in Australia: 270,000 fake government emails expose AI security gap

A massive phishing campaign impersonating Australian government services sent over 270,000 fake emails in four months, with security researchers pointing to AI assistance based on the sudden jump in sophistication and quality. The real security challenge isn't a vulnerability in the models themselves; it's that attackers can use LLM capabilities to rapidly generate convincing phishing content, either through contextual framing or through jailbreaking techniques that bypass safety guardrails.

LLM business alignment: Detecting AI hallucinations and misaligned agentic behavior in business systems

Adversarial red teaming isn't enough: even agents that pass security tests can fail in production by hallucinating product features, refusing legitimate requests, omitting critical details, or contradicting policies. In this article, you'll learn what LLM business alignment actually means, why these failures kill AI adoption in production, and how to test your agents using knowledge bases, automated probes, and validation checks.

Cross Session Leak: when your AI assistant becomes a data breach

Cross Session Leak is a data exfiltration vulnerability where sensitive information from one user's session bleeds into another user's session, bypassing authentication controls in multi-tenant AI systems. This occurs when AI architectures fail to properly isolate session data through misconfigured caches, shared memory, or improperly scoped context. This article explores how cross session leak attacks work, examines a healthcare scenario, and provides technical strategies to detect and prevent these vulnerabilities.
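
A misconfigured cache is one of the root causes listed above. A minimal Python sketch, assuming a hypothetical in-memory response cache (`ResponseCache` and the `generate` callback are illustrative names, not any specific library's API), shows why the cache key must be scoped to the session:

```python
from typing import Callable, Dict, Tuple


class ResponseCache:
    """Hypothetical in-memory cache for LLM responses.

    Keys include the session (user or tenant) ID, so an answer generated
    from one user's private context is never served to another user.
    """

    def __init__(self) -> None:
        self._store: Dict[Tuple[str, str], str] = {}

    def get_or_generate(
        self, session_id: str, prompt: str, generate: Callable[[str], str]
    ) -> str:
        # A leak-prone design keys on `prompt` alone: two patients asking
        # "What is my diagnosis?" would then receive the same cached answer.
        key = (session_id, prompt)
        if key not in self._store:
            self._store[key] = generate(prompt)
        return self._store[key]


if __name__ == "__main__":
    cache = ResponseCache()
    question = "What is my diagnosis?"
    alice = cache.get_or_generate("alice", question, lambda p: "Alice: asthma")
    bob = cache.get_or_generate("bob", question, lambda p: "Bob: no findings")
    print(alice, "|", bob)
```

The same scoping principle applies to shared memory and conversation context: anything derived from one user's data should carry that user's identity in its lookup key.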

Function calling in LLMs: Testing agent tool usage for AI security

Function calling enables LLMs to execute code, query databases, and interact with external systems, transforming them from text generators into agents that take real-world actions. This capability introduces critical security risks: hallucinated parameters, unauthorized access, and unintended consequences. This article explains how function calling works, the vulnerabilities it creates, and how to test agents systematically.
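
Hallucinated parameters are one of the risks listed above. A minimal Python sketch of a pre-execution check, assuming a hypothetical tool registry in which each tool declares its parameters and their types (the `refund_order` tool and the registry format are illustrative, not any specific framework's API):

```python
from typing import Any, Dict, List

# Hypothetical registry: tool name -> {parameter name: expected Python type}.
TOOL_SCHEMAS: Dict[str, Dict[str, type]] = {
    "refund_order": {"order_id": str, "amount": float},
}


def validate_tool_call(name: str, arguments: Dict[str, Any]) -> List[str]:
    """Return the problems with a model-proposed tool call (empty if valid)."""
    schema = TOOL_SCHEMAS.get(name)
    if schema is None:
        # The model asked for a tool that does not exist.
        return [f"unknown tool: {name}"]
    problems: List[str] = []
    for param, expected in schema.items():
        if param not in arguments:
            problems.append(f"missing parameter: {param}")
        elif not isinstance(arguments[param], expected):
            # Strict check: e.g. an int amount is rejected, not coerced.
            problems.append(f"{param} should be {expected.__name__}")
    for param in arguments:
        if param not in schema:
            # Parameters the tool never declared suggest hallucination.
            problems.append(f"unexpected parameter: {param}")
    return problems
```

A rejected call can be returned to the model as an error message instead of being executed. Real deployments would more likely validate against each tool's JSON Schema, and type checks alone do not cover authorization, which still needs its own tests.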

GOAT Automated Red Teaming: Multi-turn attack techniques to jailbreak LLMs

GOAT (Generative Offensive Agent Tester) is an automated multi-turn jailbreaking attack that chains adversarial prompting techniques across conversations to bypass AI safety measures. Unlike traditional single-prompt attacks, GOAT adapts dynamically at each conversation turn, mimicking how real attackers interact with AI systems through seemingly innocent exchanges that gradually escalate toward harmful objectives. This article explores how GOAT automated red teaming works and provides strategies to defend enterprise AI systems against these multi-turn threats.

[Release notes] New LLM vulnerability scanner for dynamic & multi-turn Red Teaming

We're releasing an upgraded LLM vulnerability scanner that deploys autonomous red teaming agents to conduct dynamic, multi-turn attacks across 40+ probes, covering both security and business failures. Unlike static testing tools, this new scanner adapts its attack strategies in real time to detect sophisticated conversational vulnerabilities.

How LLM jailbreaking can bypass AI security with multi-turn attacks

Multi-turn jailbreaking attacks like Crescendo bypass AI security measures by gradually steering conversations toward harmful outputs through innocent-seeming steps, creating serious business risks that standard single-message testing misses. This article reveals how these attacks work with real-world examples and provides practical techniques to detect and prevent them.

Secure AI Agents: Exhaustive testing with continuous LLM Red Teaming

Testing AI agents presents significant challenges as vulnerabilities continuously emerge, exposing organizations to reputational and financial risks when systems fail in production. Giskard's LLM Evaluation Hub addresses these challenges through adversarial LLM agents that automate exhaustive testing, annotation tools that integrate domain expertise, and continuous red teaming that adapts to evolving threats.

[Release notes] Giskard integrates with LiteLLM: Simplifying LLM agent testing across foundation models

Giskard's integration with LiteLLM enables developers to test their LLM agents across multiple foundation models. The integration extends Giskard's core features (LLM Scan for vulnerability assessment and RAGET for RAG evaluation) to any supported LLM provider, whether you're using major cloud providers like OpenAI and Anthropic, local deployments through Ollama, or open-source models like Mistral.

Evaluating LLM applications: Giskard Integration with NVIDIA NeMo Guardrails

Giskard has integrated with NVIDIA NeMo Guardrails to enhance the safety and reliability of LLM-based applications. This integration allows developers to better detect vulnerabilities, automate rail generation, and streamline risk mitigation in LLM systems. By combining Giskard with NeMo Guardrails, organizations can address critical challenges in LLM development, including hallucinations, prompt injection, and jailbreaks.

Giskard leads GenAI Evaluation in France 2030's ArGiMi Consortium

The ArGiMi consortium, including Giskard, Artefact, and Mistral AI, has won a France 2030 project to develop next-generation French LLMs for businesses. Giskard will lead efforts in AI safety, ensuring model quality, conformity, and security. The project will be open source, fostering collaboration and aiming to make AI more reliable, ethical, and accessible across industries.

[Release notes] LLM app vulnerability scanner for Mistral, OpenAI, Ollama, and Custom Local LLMs

We're releasing an upgraded version of Giskard's LLM scan for comprehensive vulnerability assessments of LLM applications. New features include more accurate detectors through optimized prompts and expanded multi-model compatibility supporting OpenAI, Mistral, Ollama, and custom local LLMs. This article also includes a setup guide for getting started with evaluating LLM apps.

Guide to LLM evaluation and its critical impact for businesses

As businesses increasingly integrate LLMs into their applications, ensuring the reliability of AI systems is key. LLMs can generate biased, inaccurate, or even harmful outputs if not properly evaluated. This article explains why LLM evaluation matters and how to do it (methods and tools). It also presents Giskard's comprehensive solutions for evaluating LLMs, combining automated testing, customizable test cases, and human-in-the-loop review.

New course with DeepLearningAI: Red Teaming LLM Applications

Our new course, created in collaboration with the DeepLearningAI team, provides training on red teaming techniques for Large Language Model (LLM) and chatbot applications. Through hands-on attacks using prompt injections, you'll learn how to identify vulnerabilities and security failures in LLM systems.

LLM Red Teaming: Detect safety & security breaches in your LLM apps

Introducing our LLM Red Teaming service, designed to enhance the safety and security of your LLM applications. Discover how our team of ML researchers uses red teaming techniques to identify and address LLM vulnerabilities. Our new service focuses on mitigating risks like misinformation and data leaks by developing comprehensive threat models.

Our LLM Testing solution is launching on Product Hunt 🚀

We have just launched Giskard v2, extending the testing capabilities of our library and Hub to Large Language Models. Support our launch on Product Hunt and explore our new integrations with Hugging Face, Weights & Biases, MLflow, and DagsHub. A big thank you to our community for helping us reach over 1,900 stars on GitHub.

AI Safety at DEFCON 31: Red Teaming for Large Language Models (LLMs)

DEFCON, one of the world's premier hacker conventions, had a unique focus at its AI Village this year: red teaming Large Language Models (LLMs). Instead of conventional hacking, participants were challenged to use words to uncover AI vulnerabilities. The Giskard team was fortunate to attend and witnessed firsthand the event's emphasis on understanding and addressing potential AI risks.

White House pledge targets AI regulation with top tech companies

In a significant move towards AI regulation, President Biden convened a meeting with top tech companies, leading to a White House pledge that emphasizes AI safety and transparency. Companies like Google, Amazon, and OpenAI have committed to pre-release system testing, data transparency, and AI-generated content identification. As tech giants signal their intent, concerns remain regarding the specificity of their commitments.

1,000 GitHub stars, 3M€, and new LLM scan feature 💫

We've reached an impressive milestone of 1,000 GitHub stars and received strategic funding of 3M€ from the French Public Investment Bank and the European Commission. With this funding, we plan to enhance our Giskard platform, helping companies meet upcoming AI regulations and standards. We've also upgraded our LLM scan feature to detect even more hidden vulnerabilities.

Giskard’s new beta is out! ⭐ Scan your model to detect hidden vulnerabilities

Giskard's new beta release lets you quickly scan your AI model and detect vulnerabilities directly in your notebook. The new beta also includes a simple one-line installation, automated test suite generation and execution, an improved user experience for collaborating on testing dashboards, and a ready-made test catalog.

Exclusive Interview: How to eliminate risks of AI incidents in production

During this exclusive interview for BFM Business, Alex Combessie, our CEO and co-founder, spoke about the potential risks of AI for companies and society. As new AI technologies like ChatGPT emerge, concerns about the dangers of untested models have increased. Alex stresses the importance of Responsible AI, which involves identifying ethical biases and preventing errors. He also discusses the future of EU regulations and their potential impact on businesses.

🔥 The safest way to use ChatGPT... and other LLMs

With Giskard’s SafeGPT, you can say goodbye to errors, biases & privacy issues in LLMs. Its features include an easy-to-use browser extension and a monitoring dashboard (for ChatGPT users), as well as a ready-made, extensible quality assurance platform for debugging any LLM (for LLM developers).

Giskard 1.4 is out! What's new in this version? ⭐

With Giskard’s new Slice feature, you can now identify the business areas in which your AI models underperform. This makes it easier to debug performance biases or identify spurious correlations. We have also added an export/import feature to share your projects, along with other minor improvements.

LLM Security: 50+ adversarial attacks for AI Red Teaming

Production AI systems face systematic attacks designed to bypass safety rails, leak sensitive data, and trigger costly failures. This guide details 50+ adversarial probes covering every major LLM vulnerability, from prompt injection techniques to authorization exploits and hallucinations.
