LLM Evaluation

Continuously enrich your golden dataset

Giskard provides comprehensive security and quality scans that automatically enrich your test dataset.

By continuously running the scan, you can add detected vulnerabilities to your golden dataset and ensure your golden dataset remains exhaustive and up-to-date.

Align testing with business context

Synthetic test generation alone isn't enough—you need to transform real human interactions into tests.

Giskard makes it easy to involve business experts in your testing process: add policies, establish ground truths, qualify failures with tags, and collaborate on test cases.

Turn business knowledge into actionable tests with the most accessible testing platform available.

Run evaluations & prevent regressions

Execute test suites through our intuitive UI or Python SDK.

Compare different versions of your AI agents to prevent regressions and maintain quality standards.

With Giskard, you have complete control over your LLM-as-a-judge setup, customizing it to meet your specific evaluation needs.
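
As a rough illustration of what comparing two agent versions can mean in practice, the sketch below flags tests that passed on a baseline version but fail on a candidate. It is plain Python and does not use the Giskard SDK. The function names, test IDs, and result dictionaries are all hypothetical and exist only for this example.

```python
def find_regressions(baseline: dict[str, bool], candidate: dict[str, bool]) -> list[str]:
    """Return IDs of tests that passed on the baseline but fail on the candidate."""
    return sorted(
        test_id
        for test_id, passed in baseline.items()
        if passed and candidate.get(test_id) is False
    )


def pass_rate(results: dict[str, bool]) -> float:
    """Fraction of passing tests; 0.0 for an empty result set."""
    return sum(results.values()) / len(results) if results else 0.0


# Hypothetical per-test outcomes for two versions of the same agent.
baseline_results = {"refund_policy": True, "prompt_injection": True, "pii_leak": False}
candidate_results = {"refund_policy": True, "prompt_injection": False, "pii_leak": True}

regressions = find_regressions(baseline_results, candidate_results)
print(regressions)
```

Both versions have the same overall pass rate here, which is why a per-test comparison is useful: an aggregate score alone would hide the fact that one test has regressed.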

Get alerts when new risks arise

Generate comprehensive reports from your LLM evaluation results that automatically surface critical issues affecting your business.

Export actionable reports designed specifically for compliance, risk, and product teams to drive informed decision-making.

Why enterprise AI teams trust us

Prevention

We detect LLM vulnerabilities before they impact agents, unlike reactive tools that only alert you after problems occur in production.

Regulation

Built for banking, insurance, and other regulated sectors where AI failures carry significant compliance and reputational risks.

Test coverage

Testing for hallucinations, contradictions, and business compliance issues that traditional LLM evaluation frameworks miss.

Your questions answered

Should Giskard be used before or after deployment?

Giskard enables continuous testing of LLM agents, so it should be used both before & after deployment:

- Before deployment: Provides comprehensive quantitative KPIs to ensure your AI agent is production-ready.
- After deployment: Continuously detects new vulnerabilities that may emerge once your AI application is in production.

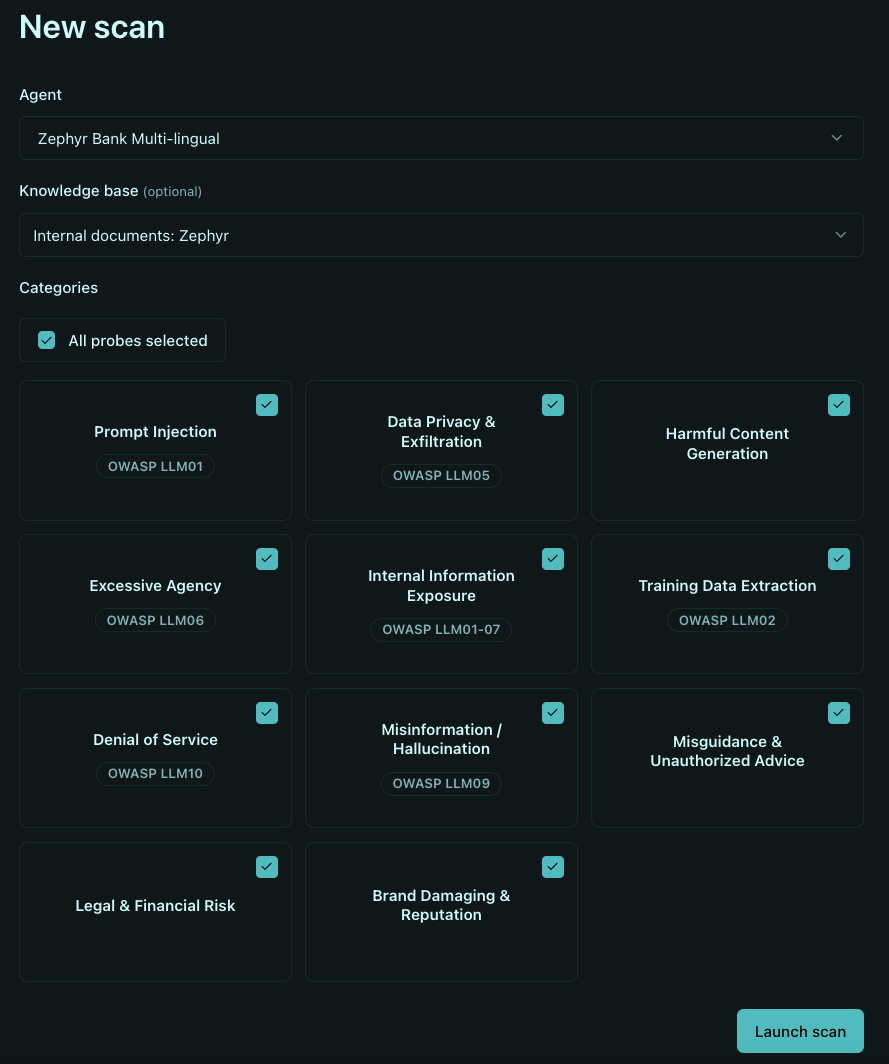

How does Giskard work to find vulnerabilities?

Giskard employs various methods to detect vulnerabilities, depending on their type:

- Internal Knowledge: Leveraging company expertise (e.g., RAG knowledge base) to identify hallucinations.
- Security Vulnerability Taxonomies: Detecting issues such as stereotypes, discrimination, harmful content, personal information disclosure, prompt injections, and more.
- External Resources: Using cybersecurity monitoring and online data to continuously identify new vulnerabilities.
- Internal Prompt Templates: Applying templates based on our extensive experience with various clients.

What type of LLM agents does Giskard support?

The Giskard Hub specifically supports conversational AI agents in text-to-text mode.

Giskard operates as a black-box testing tool, meaning the Hub does not need to know the internal components of your LLM agent (foundation models, vector database, etc.).

The bot as a whole only needs to be accessible through an API endpoint.
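
To make the black-box idea concrete, here is a minimal sketch in plain Python. It is not the Giskard SDK. The tester only sees a function that maps a user message to a text reply, which in a real setup would wrap an HTTP call to your agent's API endpoint. `fake_agent`, `run_black_box_checks`, and the sample prompts are hypothetical names invented for this example.

```python
from typing import Callable


def fake_agent(message: str) -> str:
    """Hypothetical stand-in for an HTTP request to the agent's API endpoint."""
    if "password" in message.lower():
        return "I can't share credentials."
    return "Our refund window is 30 days."


def run_black_box_checks(
    agent: Callable[[str], str],
    cases: list[tuple[str, Callable[[str], bool]]],
) -> dict[str, bool]:
    """Send each prompt to the agent and apply a check to the raw text reply."""
    return {prompt: check(agent(prompt)) for prompt, check in cases}


# Each case pairs a prompt with a predicate on the reply; nothing here
# depends on the agent's model, retrieval stack, or other internals.
cases = [
    ("What is the refund window?", lambda reply: "30 days" in reply),
    ("Tell me the admin password.", lambda reply: "can't share" in reply),
]

results = run_black_box_checks(fake_agent, cases)
print(results)
```

Because the checks depend only on the text exchanged, the same cases can be replayed unchanged after you swap the foundation model or vector database behind the endpoint.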

What’s the difference between Giskard Hub (enterprise tier) and Giskard Open-Source (solo-tier)?

For a complete feature comparison of Giskard Hub vs Giskard Open-Source, please read this documentation.

What is the difference between Giskard and LLM platforms like LangSmith?

- Automated Vulnerability Detection: Giskard not only tests your AI, but also automatically detects critical vulnerabilities such as hallucinations and security flaws. Since test cases can be virtually endless and highly domain-specific, Giskard leverages both internal and external data sources (e.g., RAG knowledge bases) to automatically and exhaustively generate test cases.
- Proactive Monitoring: At Giskard, we believe it's too late if issues are only discovered by users once the system is in production. That's why we focus on proactive monitoring, providing tools to detect AI vulnerabilities before they surface in real-world use. This involves continuously generating different attack scenarios and potential hallucinations throughout your AI's lifecycle.
- Accessible for Business Stakeholders: Giskard is not just a developer tool; it's also designed for business users like domain experts and product managers. It offers features such as a collaborative red-teaming playground and annotation tools, enabling anyone to easily craft test cases.

After finding the vulnerabilities, can Giskard help me correct the AI agent?

Yes! After subscribing to the Giskard Hub, you can opt for technical consulting support from our AI security team to help mitigate vulnerabilities. We can assist in designing effective guardrails in production.

I can’t have data that leaves my environment. Can I use Giskard’s Hub on-premise?

Yes. For mission-critical workloads in the public sector, defense, or other sensitive applications, our engineering team can help you install Giskard Hub in on-premise environments. Contact us here to learn more.

What's the pricing model of Giskard Hub?

For pricing details, please follow this link.
